(Top) Image under-exposed without processing.

(Bottom) Under-exposure with HDR hallucination post-processing.

Nearly two weeks ago, something intriguing trickled into my Google Reader from Microsoft Research: a newly published research paper on a technology called “HDR Hallucination” that could revolutionize digital photography post-processing.

Not only was I treated to pages of before-and-after comparisons, but also to a great screencast of the technology and tools in action. From the second I saw the lightbulb example, I knew I wanted to get my hands on this. Two weeks later, I still haven’t had the chance to play with the software (no matter how much I bribed the lawyers), but I got to do the second-best thing: chat with a member of the project team. Here is what he had to say.

Who are you? How are you involved in this project?

My name is Li-Yi Wei and I am a researcher at Microsoft Research Asia. You can find more information about me on my web page and in my curriculum vitae.

How did this project come about? Did you wake up one day screaming “we need to make an HDR hallucination tool”?

One day, Kun Zhou, the third author of the paper and one of the most creative people I have seen in my life, told me over lunch that he had a brilliant idea.

HDR imaging and display have been a hot topic in both research and industry, but so far no good solution has been offered for converting LDR (low-dynamic-range) content into HDR (high-dynamic-range) content, especially for historical documents where you don’t have the luxury of taking multiple photos at different exposures. This is a classic ill-posed and under-constrained problem, and if you use traditional computer vision techniques, it is never going to work.
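A quick aside from me rather than from the interview: the reason this problem is ill-posed is easy to see in a toy example. The sketch below is my own simplification, assuming a linear camera response with no gamma curve; the function name `to_ldr` and the radiance values are made up for illustration. Very different scene brightnesses all collapse to the same clipped pixel value, so the original brightness cannot be recovered from the pixel alone.

```python
def to_ldr(radiance, exposure=1.0):
    """Map linear scene radiance to an 8-bit pixel value, clipping at white.

    Assumption: a purely linear response; real cameras also apply a tone curve.
    """
    value = round(radiance * exposure * 255)
    return max(0, min(255, value))


# Hypothetical radiances: two well-exposed values and three that are too bright.
scene = [0.2, 0.8, 1.5, 4.0, 40.0]
pixels = [to_ldr(r) for r in scene]

# The last three radiances differ by a factor of more than 25,
# yet they are indistinguishable once stored as LDR pixels.
print(pixels)
```

Anything that ends up at 255 has lost its real brightness, which is why the missing detail has to be invented, or “hallucinated”, rather than measured.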

Since both Kun and I are ‘graphics people’ instead of ‘vision people’, we decided to attack an easier problem: instead of building a fully automatic and robust system (the vision approach), we simply built a user-friendly tool so that we could leverage human intelligence for good results (the graphics approach). My main contribution was to use texture synthesis (one of my areas of expertise) as the major tool for hallucination.

Original image LDR version (left). Original image HDR version (middle). Original image with ‘Hallucination’ processing (right).

By comparison, the HDR hallucination is almost identical to the real HDR.

What is texture synthesis? How does it differ from the clone tool in Photoshop?

Texture synthesis is a technique to produce an output texture from an input sample. The output can be of arbitrary size. For example, the input sample might be a small piece of orange skin (you cannot get a very big sample because of the curvature), and the output a much larger patch of the same texture. See http://graphics.stanford.edu/projects/texture/ for more details.

To my knowledge, the clone tool in Photoshop just copies regions, so the quality might not be as good as with texture synthesis: you need a big enough source region to avoid visible repetition.
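To make the idea a little more concrete, here is a minimal sketch of my own, not code from the paper or from the tool. It assumes a simple neighbourhood-matching approach: the output starts as noise and is filled in pixel by pixel, each time copying the sample pixel whose already-visited neighbours look most like the output’s. The function name `synthesize`, its parameters, and the tiny striped sample are all hypothetical, and the brute-force search is far too slow for real photos.

```python
import random


def synthesize(sample, out_h, out_w, half=1, seed=0):
    """Grow an out_h x out_w texture from `sample`, a 2D list of grey values."""
    rng = random.Random(seed)
    in_h, in_w = len(sample), len(sample[0])
    # Start from noise drawn from the sample's own pixel values.
    out = [[sample[rng.randrange(in_h)][rng.randrange(in_w)]
            for _ in range(out_w)] for _ in range(out_h)]
    # Causal neighbourhood: rows above, plus pixels to the left in the same row.
    offsets = [(dy, dx)
               for dy in range(-half, 1)
               for dx in range(-half, half + 1)
               if (dy, dx) < (0, 0)]
    for y in range(out_h):
        for x in range(out_w):
            best, best_cost = None, None
            # Exhaustive search over every sample pixel (edges wrap around).
            for sy in range(in_h):
                for sx in range(in_w):
                    cost = 0
                    for dy, dx in offsets:
                        a = out[(y + dy) % out_h][(x + dx) % out_w]
                        b = sample[(sy + dy) % in_h][(sx + dx) % in_w]
                        cost += (a - b) ** 2
                    if best_cost is None or cost < best_cost:
                        best, best_cost = sample[sy][sx], cost
            out[y][x] = best
    return out


# A tiny hypothetical sample: vertical black and white stripes.
sample = [[0, 255, 0, 255] for _ in range(4)]
result = synthesize(sample, 6, 8)
```

Unlike cloning, nothing here copies a whole region: each output pixel is chosen individually, which is why the output can be larger than the sample without simply tiling it.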

How many people worked on this project?

Four people spent significant amounts of time on this project: Lvdi Wang, Kun, myself, and another guy named Josh, who participated early on but later left the team. Basically, Lvdi (an intern student) did all the coding, and Kun and I (and Josh) came up with the ideas and algorithms.

How long did it take to develop?

We started this project sometime around October 2006 and finished it up around January 2007. It was a quick and horrendous push.

What were some of the challenges developing this tool?

We had this idea that we wanted to ‘hallucinate’ HDR content, but initially we didn’t really know how.

We first asked our computer vision colleagues for their opinions, but they told us this problem is “impossibly difficult to solve”. So the first (mental) obstacle we overcame was deciding to solve it with an interactive GUI tool, as opposed to the fully automatic system that vision people prefer. The second challenge was deciding which tools we should provide in the GUI to balance usability (as few and as simple tools as possible) and power (capable of achieving many effects).

After some trial and error we settled on three tools: an illumination brush, a texture brush, and a warp brush.

What scenarios does your tool work best for? What doesn’t it work for? Can you overcome any of these problems in the future?

The performance of our algorithm primarily depends on the availability of good textures; i.e., if there are well-illuminated areas in the image from which we can borrow textures for over-exposed regions, our algorithm will do fine. Otherwise there is nothing we can do.

One potential direction for future work is to borrow textures from another image, as we did for the fire example, but that is obviously not as straightforward as using a single image.

Texture sample (left). Original image (middle). Original image with ‘Hallucination’ processing (right).

The technology obviously works very well at the moment. Are there plans to improve it even further?

We are not 100% sure yet whether it is really as user-friendly as commercial products such as Adobe Photoshop. For now we will concentrate more on the user experience. In the meantime we are also trying to come up with a demo product to show around internally.

Is human input always required? Would it be possible in the future to have a one-click solution to automatically add HDR hallucination?

Human input is almost always needed. There are some cases (as shown in our paper) that are fully automatic, but these are the exception rather than the norm. A one-click solution might be possible, but we think it is very hard.

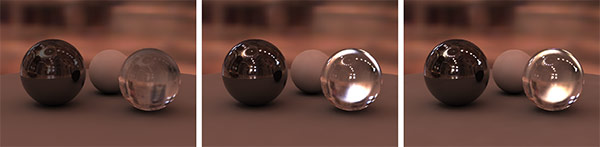

Original image under-exposed (left). Original image under-exposed with ‘Hallucination’ processing (right).

I’m a little blown away by the light-bulb example. How did the software synthesize the glowing filament in the light bulb?

The filament is painted by the user with the illumination brush, so it is quite easy. However, the texture within the bulb is done with the texture brush; this is a subtle effect, but without it the result would look unrealistic (too clean).

Has there since been any interest from Microsoft or other parties to integrate this technology in their products?

Not yet. We haven’t really pushed it; we haven’t even shown it to any product people. We plan to demonstrate it at the next Microsoft TechFest (around March 2008).

What’s the next step?

We haven’t thought much about that. We definitely would like to see it turn into real products, so talking to product people is currently our next step. On the technical side I would like to pursue more practical applications for texture synthesis; I have been working on this topic since 2000 and so far I haven’t seen any real practical applications.

————————————————————————————————————————————————————————-

I wish Li-Yi Wei and the rest of his team the best of luck in getting their great idea fine-tuned and implemented in a consumer application as soon as possible. I have no doubt that countless amateur photographers will appreciate just how powerful and useful a tool this is for drastically improving the look of photographs taken in less-than-ideal environments.

I admire anyone who can say ‘leverage’ instead of ‘use’ without wincing