Several days ago, some Windows 7 users observed that the Windows 7 disk checking utility consumes a large amount of memory in a very short time. The story has since snowballed through the blogosphere, leading to the sensationalist belief that this was a “showstopper” bug for Microsoft.

Whilst I agree there is some merit in debating the technical aspects of this phenomenon, such as why this process functioned properly in previous versions of Windows without much RAM, I’m surprised at just how many users in this day and age are still needlessly concerned about resource utilization. I’m coining this “resource-spotting”, a nod to trainspotting. To put that in context: if you ran this tool without Task Manager open side-by-side, would you be any the wiser?

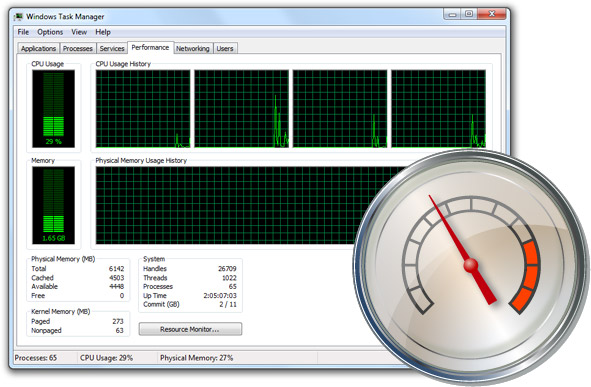

Time and time again, I come across people who watch their Task Manager window as if it were the Olympic 100m sprint. They are probably the same people who have the “CPU Meter” gadget on their desktop. I admit there was a time when it might have been useful to minimize the “baseload” of CPU and RAM utilization to maximize performance, but with the prevalence of exponentially more powerful hardware and more preemptive resource optimizations, it’s just no longer practical or relevant.

Take for example Windows Vista and Windows 7. These are modern operating systems with a number of background services that aim to optimize performance by identifying and precaching resources you may need to use before you use them. For this reason, around 70% of your RAM when idling (in my case 4GB out of 6GB) is actually utilized for caching. Of course when other applications need RAM, the cache is automatically reduced to accommodate other processes.

Another common example I see these days is people complaining about the memory footprint of Firefox, or any next-generation browser for that matter. As a full-time Firefox user, I confess Firefox is my most memory-consuming process for most of my day, but I’m not at all concerned about this, and why should I be? If I can keep dozens of tabs open which are all equally responsive to switch between, and AJAX-heavy webpages load and perform extremely well, why should I use a slower but less memory-intensive browser if I have the memory to spare?

To me, what’s more disappointing about resource utilization is under-utilization. If I have the resources to spare, at any point in time where performance can be improved by utilizing more resources but isn’t, that’s a much worse deficiency of modern computing than a large number in a table of numbers.

Agreed. That’s why I hate people complaining about Vista being a memory hog.

I agree. There are too many old-school thinkers concerned that they might actually be utilising all the hardware they paid for.

I completely agree with this sentiment. I never understood why people who have 4 GB (or even 8 GB) of memory care that their browser, media player, or other application uses 100 MB of memory.

I’ve grown tired of people moaning about “excess memory usage” especially when they have tons of RAM. I really don’t see a reason to monitor resources unless you feel your performance is below par.

I quite agree with you. Though, I still can’t figure out why TweetDeck needs 119 megs of RAM. For thumbnails and a bit of text? 🙂

Totally, totally agree. The whole keeping track of resources thing is annoying, but complaining that 2GB of your 2GB RAM is being used is just retarded.

What an odd article, to compare “useful typical resource utilization” with an aggressive bug in both chkdsk and the integrated disk scanner that empties the available RAM in a few seconds, including on Windows Server 2008 R2, according to reports. The implications for this and a system utilized by e.g. 20 users sharing 1% of remaining CPU due to this bug, put in relation to this article, turns into comedy.

Btw, “CPU” should be “RAM” above, but my point still stands.

@Jug: Why does it matter the chkdsk tool utilizes all of your RAM when it runs? If you don’t put it side by side with a task manager, would you even know it’s using all of your RAM?

i’ll agree that not using all your resources is silly, but here’s how i feel: despite having extravagant amounts of resources, my general computing experience doesn’t feel any faster than it did 5 or 10 years ago when using a modern OS on modern hardware. i suspect other people have a similar experience to me (or worse, since the average consumer is probably running the latest OS on old or cheap hardware).

i think people generally feel that with massive resources and the newest OS, their computing experience should feel fast. when it doesn’t, they look for the things which are “hogging” their resources and causing bloat–500+mb instances of firefox, seemingly meaningless tasks and services running in the background, etc. They want to know why they aren’t seeing any improvement, why they are upgrading only to get the same performance they were before. The average person doesn’t care about RAM, CPU, how long it takes to calculate PI to X decimal places, or how many polygons can be drawn in a second. They just “feel” their computing experience is slow and inadequate, so they look for reasons.

The reason for complaining about Firefox’s (or any other individual application’s) memory usage is that people tend to *multi-task*. If I’m using and it wants 2.5Gb of memory and then comes along and wants another 2.5Gb of memory, then my machine with a mere 4Gb of memory isn’t going to be able to cope with both programs at once and one of them will find themselves with their memory swapped out to disk occasionally, negatively affecting performance.

Now this doesn’t mean I’m against applications using up as much memory as they like. The problem is that *badly written* applications might not give that memory up again, or might not recognise that there’s another app that needs the memory. One badly written app can negatively affect everything else. And besides, it’s just bad practice to write software and not worry about the resources it uses – it assumes that everyone is running your hyper-machine set up of 24Gb RAM and 6Ghz 32 core CPU, when in reality there are still people out there using 286s.

@BenN: I agree if an application is badly written and using RAM for no purpose whatsoever it needs to be addressed, but personally I see a clear performance benefit of using Firefox even with its large memory footprint.

Even on my laptop with 2GB of RAM, I haven’t come across any cases where Firefox has negatively impacted the performance of other applications, including resource-intensive applications like Photoshop.

I don’t agree.

If people stop bothering about memory usage, some developers won’t want to sacrifice any more time optimizing their apps for the lowest memory usage possible.

This would lead – eventually – to lots of resource hogs, till the point people start bothering again because things got out of hand. My point is: It would become a vicious circle so it’s better to keep watching our daily apps, even with all this powerful hardware we have nowadays.

I just noticed my comment skipped out on some tags…

” If I’m using and it wants 2.5Gb of memory and then comes along ”

should read

“If I’m using <Generic-Browser> and it wants 2.5Gb of memory and then <Generic-Word-Processor> comes along”

Never use angular brackets on the web… lesson learnt.

You should switch to Google Chrome, it’s way heavier, and faster.

Not everyone is on a Nehalem processor stuffed with RAM. I am, but to generally go against people who keep an eye on Task Manager is silly.

@xaml: But even if you’re on a low-powered computer such as an Atom netbook, looking at the task manager won’t make your machine any faster than it can be either.

I think programmers have a duty to run a CPU meter on their desktop so that they notice when their own code consumes too much CPU. (Something that is harder to notice in these multi-core days, too… Now if your code gets into a 100% CPU loop the system doesn’t slow to a crawl and a CPU meter is the only way you’ll normally see it.)

Similarly, it’s good for programmers to keep an eye on their own processes for memory leaks or unexpectedly high RAM usage.
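The multi-core point above can be made concrete with a small sketch. It assumes the overall CPU figure is simply the average of the per-core figures, which is how a single headline percentage is commonly derived; the function name is made up for illustration.

```python
def overall_cpu_percent(per_core_percent):
    """Average per-core utilization into one overall percentage.

    Assumption: the headline figure is the plain mean of the per-core
    figures. `overall_cpu_percent` is a hypothetical helper.
    """
    if not per_core_percent:
        raise ValueError("need at least one core")
    return sum(per_core_percent) / len(per_core_percent)


# One runaway thread pegging a single core at 100%:
single_core = overall_cpu_percent([100.0])                 # whole machine busy
quad_core = overall_cpu_percent([100.0, 0.0, 0.0, 0.0])    # a quarter busy
eight_core = overall_cpu_percent([100.0] + [0.0] * 7)      # an eighth busy
```

On a single core the runaway loop is impossible to miss; on eight cores the same bug barely moves the overall number, which is why a meter (ideally a per-core one) is the only way a developer is likely to spot it.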

I totally agree that there are a lot of people who get too worried about this stuff, though. They’re the same people who complain that an executable is a few meg in size these days, as if exes still have to fit on a floppy disk and as if the operating system loads the whole thing instead of just the parts which you actually use. The days of a few KB mattering are gone, except in very special cases, and it’s far more important for programmers to concentrate on features rather than reducing a number in Task Manager to no noticeable effect.

People don’t seem to understand that the OS will page unused data out to disk. High memory usage generally only causes contention if that memory is being actively used and kept in physical RAM.

This chkdsk thing seems fine to me, provided it backs off its (physical) memory usage if other processes start to require more (physical) memory. When we say it’s good not to leave resources unused (which is true), it’s important to remember that previously unused resources may be in contention a moment later when the user starts something else going.

A lengthy background process shouldn’t assume it’s the only thing running on the machine and allocate all of the (physical) memory, for a minor performance increase, unless it’s prepared to give memory back the moment it’s needed. (I’m not claiming that chkdsk does/doesn’t do this, though. I don’t know what it does. Just a general comment.)

…Having said all of that:

I stopped using the Vista sidebar due to its resource consumption. I expect something like the sidebar to use minimal CPU and memory because it’s doing something very simple and always running in the background. It should be lightweight and not get in the way of other more important processes.

A lot depends on which gadgets you use but the sidebar’s design seems to encourage (not force but encourage) people to use techniques which waste CPU and memory and which have to poll instead of using events, etc. Finding the two sidebar.exe processes each using several hundred meg of RAM and noticeable CPU was annoying. (I also disliked the visual inconsistency of different gadgets. Even ones based on the same themes didn’t quite line up right. Many didn’t have common themes at all.) I ditched the sidebar after about 18 months and switched back to Samurize (made to look like a sidebar :)) which gets the same job done far better, IMO.

Quote:

The average person doesn’t care about RAM, CPU, how long it takes to calculate PI to X decimal places, or how many polygons can be drawn in a second. They just “feel” their computing experience is slow and inadequate, so they look for reasons.

And in the vast majority of cases, the culprit is … the hard disk. Modern CPUs are so incredibly fast that instructions are comically cheap to execute, and RAM is a problem only if you see active disk swapping. The disk, however, is only approximately a million times slower than CPU-cache latency. From my personal experience, that’s why a good solid-state drive (SSD) so fundamentally changes the computing experience.
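As a rough check on the “million times slower” figure, here is a back-of-the-envelope sketch. Both latencies are assumed ballpark values chosen for illustration (about 10 ns for a cache hit, about 10 ms for a random seek on a spinning disk); they are not measurements, and real hardware varies widely.

```python
# Assumed ballpark latencies in nanoseconds (illustrative, not measured).
CACHE_HIT_NS = 10            # one CPU cache hit
DISK_SEEK_NS = 10_000_000    # ~10 ms random seek on a spinning hard disk


def slowdown(slow_ns, fast_ns):
    """How many times longer the slow operation takes than the fast one."""
    return slow_ns // fast_ns


ratio = slowdown(DISK_SEEK_NS, CACHE_HIT_NS)
print(f"a disk seek is roughly {ratio:,}x slower than a cache hit")
```

Under these assumptions the ratio comes out at six orders of magnitude, so a handful of unexpected disk seeks can outweigh an enormous amount of CPU work.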

When you work for a company that just bought laptops with 2GB of memory that they will not upgrade, and all of Microsoft’s products take up more and more memory with every update, you might start to care.

Long, superb post. My wife, to name one non-tech person, does not care at all if IE8 is consuming a lot of machine resources as long as she can get the job done. And even in a resource exhaustion scenario, she will simply turn the machine off and on again.

Exactly. That’s why I always say when people complain about CPU utilization in the 70-80% range: “You paid for this wonderful many-core, high speed wonder of technology – and now you want it to sit idle all the time? Make it do what you bought it for – crunch those numbers!”

If the user is more aware of what goes on on the PC, I think that should be supported. There’s Task Manager, but there’s also the Programs screen, or the Add-on screens in today’s browsers. Fortunately, technology is still moving forward pretty fast, as per Moore, yet people tend to stick with habits a little longer. But back to the Check Disk utility: if it was designed to use a lot of RAM, then that should be communicated to the user in some way, be it through an updated description or a message. That would have taken away some of the fire, apart from the blue screen error.

Why are we hearing so many reports of a BSOD when chkdsk /r is run on secondary disks then?

People are just discovering their faulty RAM modules with that chkdsk ‘bug’. Also, disabling the paging file is and always will be a stupid move.

There is a fine line between making use of resources and ending up using them all up. Microsoft apps tend to be more on the latter side compared to other industry apps of the same era. Usually every release is more bloated and less responsive.

Only partly agree. Of course it’s not the time to count single MBs of RAM any more.

On the other hand I regularly get the “there isn’t enough physical RAM available” dialog in Windows which proposes to close IE – which consumes >800 MB of private pages for a single tab! Closing IE and reopening it with the same page afterwards shows the standard consumption of a few megabytes. BTW, same for FF.

So yes, I DO care about memory consumption, because that dialog box is really annoying and I prefer to have a browser that doesn’t eat up my memory.

@Ooh: Always configure the page file to 2.5X the size of your RAM. I have never seen that dialog with this.

Sorry, I mean 1.5X the size of RAM. In my case, I have 1.5GB and Windows needs a 2300MB page file to be OK.
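The arithmetic behind that figure is easy to check. The sketch below only reproduces the commenter’s 1.5X rule of thumb; it is not a sizing recommendation, and the function name is hypothetical.

```python
def page_file_mb(ram_mb, multiplier=1.5):
    """Page file size in MB under the commenter's 'multiplier x RAM' rule."""
    return ram_mb * multiplier


ram_mb = 1.5 * 1024                 # 1.5 GB of RAM expressed in MB
suggested_mb = page_file_mb(ram_mb)
print(f"{ram_mb:.0f} MB of RAM -> {suggested_mb:.0f} MB page file")
```

With 1.5 GB of RAM this gives 2304 MB, which matches the roughly 2300 MB quoted above.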

“People don’t seem to understand that the OS will page unused data out to disk”

and, indeed, a lot of that kind of RAM usage can actually be memory-mapped disk IO – without bothering to check, I suspect that is the case in the chkdsk example – which doesn’t even need to be paged out (because the OS knows it’s already available on the disk), it just needs to be reloaded when needed
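For readers unfamiliar with memory-mapped file IO, here is a minimal sketch using Python’s standard `mmap` module. It illustrates the general technique only; nothing here claims to show how chkdsk actually works, and the helper name and demo file are made up for the example.

```python
import mmap
import os
import tempfile


def read_via_mmap(path, offset, length):
    """Read `length` bytes at `offset` by mapping the file into memory."""
    with open(path, "rb") as f:
        # Length 0 maps the whole file. A read-only mapping is backed by the
        # file itself, so the OS can drop its pages under memory pressure
        # and re-read them from the file later instead of paging them out.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            return bytes(view[offset:offset + length])


# Usage: write a throwaway demo file, then read a slice through the mapping.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"0123456789" * 1000)
    demo_path = tmp.name
try:
    chunk = read_via_mmap(demo_path, 10, 10)
finally:
    os.remove(demo_path)
```

Pages touched through such a mapping are typically counted against the process while they are resident, which is one reason a large number in Task Manager does not necessarily mean the memory is unavailable to other programs.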

The issue was a rare bug resulting in a BSoD from using chkdsk.

@Phusky Phunsar: No one I know has been able to recreate a BSOD from this bug. Yes it uses lots of memory, but your system remains stable and responsive during the operation.

I disagree. It’s like saying, “I make $2,000 a month, so it’s okay if I spend $400 on toilet paper.”

@YetAnotherPC: No, that’s a very poor comparison. The fact is you’ve already spent a fixed amount of money on the RAM in your PC. That does not change with how you use it. However, if you’re only ever using half of the RAM you have, then you have pretty much wasted half of the money you spent.

While I agree with most of your article, I can certainly provide you with a reason to monitor CPU utilization. I have a newer laptop. Like most modern laptops, it gets HOT. Out of nowhere, the fans start churning and the thing heats up. Task Manager/Resource allow me to find out which process is utilizing the CPU and burning my lap, and quickly kill it, or restart IE/Firefox. Flash ads or videos are a killer.

U really think that apps are equally fast 10 years ago and now? … they… look the same speed but they load a whole lot of new features… and power… and u didn’t lose performance.

While this doesn’t look like a gain in performance, indeed it is…

Let’s put it this way…

U are driving at 60mph, and another car comes and passes at ur side at 70mph… the real speed of the car is 70mph but u’ll only notice that it goes 10mph faster… u’ll see that amount of difference…

Same happens here… u only see 10mph… of difference… but u are not seeing it’s a big truck running at ur 60mph plus 10 more…

The point is… we don’t see the full picture… we only “feel” something… but there is a lot more stuff involved.

About the memory consumption… I’ll have to agree… it’s pointless to have memory idling… maybe it’s different with processors and disks.

Anyway the most important point here to notice is… Don’t u see that u are running lots of apps… with lots of features… visual effects and all that… and despite that it’s faster than old apps… i see that as improvement…

And still.. i think there is a “limitation” in the speed at which the OS could run and still feel comfortable… i prefer smooth effects and clean nice transitions between the apps… it feels friendly that way.

Don’t overlook the flip side of the coin: these same users are so easy to manipulate. Just call yourself “uApp” and make frequent mention of how “lightweight” and “fast” your app is in your press releases. Everyone will eat it up and revere you as some sort of master programmer because you used UPX on your executable and compressed it to 50% of its original size.

I will have to agree with BenN: in spite of all these modern processors and OSes, I haven’t seen a noticeable improvement in the way I deal with apps. While Gonzalo notes that today’s apps come packed with features, I want to make the sweeping remark that at least 20% of the features in today’s apps could be dispensed with. That’s based on general observation; I don’t have specific data to support it. BTW, more features need not mean more resource consumption.

Nonetheless, it has become a habit for me to look at Task Manager to see what is consuming how many resources, and to take action against anything that seems to use a disproportionate share. And why do I do it? From my experience, if the memory meter shows more than 50% consumption, I have invariably seen a drop in performance (some might want to disagree). Yes, I feel bad because the money I spent on memory is probably only used to that extent – but I need it to have my apps running ‘normally’.

Sorry. I was quoting Ben’s comment. Please read BenN as Ben.

It depends on the context. If a developer has profiled her code and intentionally trades memory for performance, then it’s cool and desired.

But I don’t see this happen. All the time I see perfect correlation between careless usage of resources and buggy code. For instance, Microsoft WMDC at the same time is the buggiest and most memory consuming application in Windows after clean install. Its two parts take more memory than Visual Studio 2008 with a project loaded.

Windows uses memory for caching. A badly written application forces Windows to hand this memory over to it, thus increasing response time if the user wants to launch something that was evicted from the cache.

Besides high memory usage, an application might also hold open (or even leak) other system resources like threads, window handles or file handles. Until Intel can demonstrate a low-end CPU running at 10GHz, these resources are scarce and managing them is not free for the OS.

One might have 16GB of RAM but only 1MB of L2 cache. Oh, and how could we forget about locality of reference? Because of the dramatic difference in performance between RAM and the L1/L2/L3 caches, reducing the memory footprint of an application may actually improve performance, even if the algorithm is slower. There is no solution to this problem in the near future, so unjustified resource usage should not be tolerated.

In the end, some of the code consuming memory on local machines is going to be executed in the “cloud” and there will not be “unused” resources.